Does ChatGPT give the same answers to everyone? Is AI reliable?

Is AI reliable? This is a question asked by so many people nowadays.

In this article we will explore how and why responses differ from one user to another, and you will learn how to steer AI so it works the way you want.

Is it a bug, or is it a feature? Let’s jump right in!

I. Introduction

In an age where algorithms power everything from playlists to personalized ads, it’s tempting to assume that AI always plays it straight: same question in, same answer out.

But that assumption falls apart the moment you spend time with ChatGPT.

After a conversation with some friends about some nerd stuff, we all said “Let’s ask ChatGPT!” and we all did it at the same time.

Guess what? Three different answers popped up, and two of them were not even close to each other…

So let’s ask the big question: Does ChatGPT really give the same answers to everyone?

Short answer? No.

Long answer? No, and that’s entirely intentional.

ChatGPT isn’t built to parrot back canned responses, even though many people use it for research and some consistency there would have been nice.

Instead, it’s engineered to generate content dynamically, tailored in real time to context, phrasing, and even your conversation history (if you have conversation history enabled).

What you’re engaging with isn’t just software; it’s a probabilistic powerhouse built to adapt constantly to your inputs.

II. The Nature of Language AI: Reliable, Not Reliable? Generative, Not Database-Driven

At its core, ChatGPT is a generative language model, which means it doesn’t pull from a static vault of prewritten answers.

Fun fact: during an MIT event, OpenAI CEO Sam Altman was asked if GPT‑4 training cost $100 million and he replied: “It’s more than that.”

Now put this in context with newer models like o1 and o3, which are likely even more expensive to train and to run.

ChatGPT creates responses on the fly, drawing from patterns it learned during its extensive training across books, articles, websites, and more.

This is where it sharply diverges from traditional search engines or knowledge databases.

A search engine like Google retrieves pages that already exist, so a question like “What’s the capital of France?” tends to surface the same top result time after time.

ChatGPT, on the other hand, predicts the most probable next words based on your input, your tone, and your context, including the chat history of the current session (and your other conversations, if you have enabled the memory feature).

Because of this real-time generation, variation isn’t just possible, it’s expected.

The same question, phrased differently, or even asked by two different users in slightly different contexts, can produce subtly (or dramatically) different answers.

It’s not a flaw; it’s a feature. This dynamic adaptability is what allows ChatGPT to feel human, relevant, and contextual, even if it sometimes means no two answers are exactly alike.

III. Key Reasons ChatGPT & AI Responses Vary

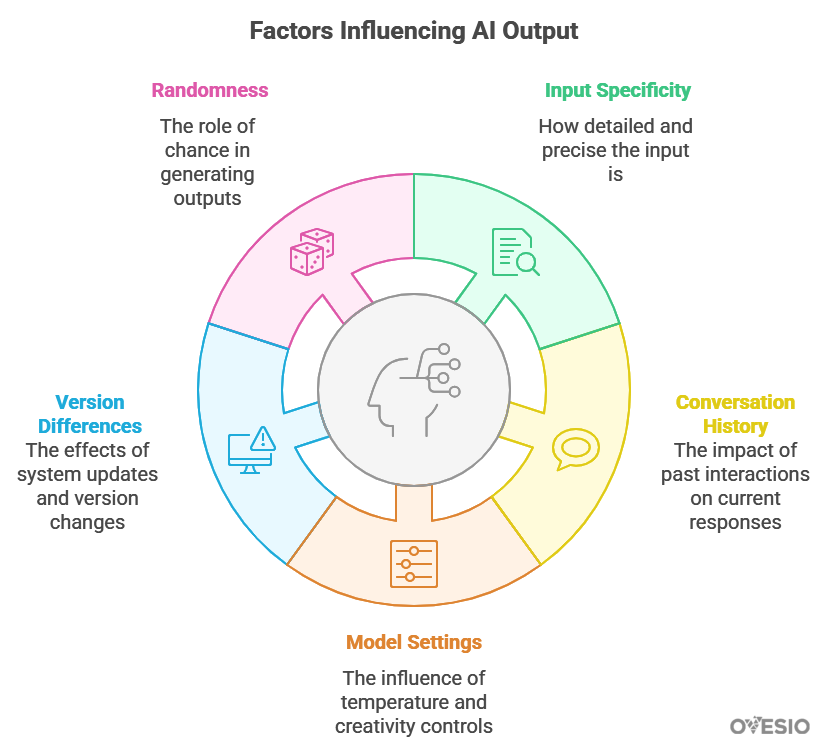

1. Input Specificity & Contextual Nuance

ChatGPT is deeply sensitive to how questions are phrased.

Ask it “What’s the capital of France?” and you’ll get a quick, clean “Paris.”

But ask “What was the capital of France during the Enlightenment?” or “What’s France’s capital known for culturally?”, and suddenly, the answer shifts in tone, depth, and direction.

It’s not just about the topic, it’s about the texture of your prompt.

A vague question opens the door to interpretation.

A richly detailed prompt, on the other hand, signals intent and guides the model more precisely.

In short: the more specific you are, the more specific ChatGPT becomes, and that’s why two users asking similar but not identical questions can walk away with very different responses.

For example, if you ask ChatGPT “How much is x in 2+x=10?” it will give you an answer, and it will most probably explain how it got there.

But if you specify “answer in only one word,” it will reply with the result only: 8.

2. Conversation History Effects

Another invisible force shaping ChatGPT’s answers? Your chat history.

The model uses prior messages in a session (and, if memory is enabled, details from earlier chats) to create context.

Ask “What’s a good way to reduce stress?” and later follow up with “What about diet?”, and ChatGPT remembers what you asked previously. It tailors the second response to your earlier question.

But here’s the twist: start a new session, and that same second question, “What about diet?”, has no context. It becomes a blank slate, and the answer shifts accordingly.

Even if you have the memory function turned on, the answer won’t be as specific as it would be inside the original chat.

This means even you can get different answers from ChatGPT just by asking at different points in a conversation, or in a fresh session.

Multiply that across different users and you begin to see why ChatGPT’s answers are rarely copy-paste clones.
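To make the session effect concrete, here is a minimal sketch of how a chat turn is typically packaged as a list of role/content messages. The helper name `build_messages` and the assistant's reply text are made up for illustration; this is not ChatGPT's internal code, only a picture of why the same follow-up carries different context.

```python
def build_messages(history, new_question):
    """Return the message list a chat model would receive for this turn."""
    # Copy the history so the caller's list is not modified.
    messages = list(history)
    messages.append({"role": "user", "content": new_question})
    return messages

# Same session: the follow-up travels together with the earlier exchange.
same_session = build_messages(
    [
        {"role": "user", "content": "What's a good way to reduce stress?"},
        # Placeholder reply, invented for this example.
        {"role": "assistant", "content": "Regular exercise and good sleep help."},
    ],
    "What about diet?",
)

# Fresh session: the identical follow-up arrives with no context at all.
fresh_session = build_messages([], "What about diet?")

print(len(same_session))   # 3 messages: the model can see the stress topic
print(len(fresh_session))  # 1 message: "diet" could mean anything
```

In the first case the model can relate "diet" to stress; in the second it has to guess what you mean.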

3. Model Settings: Temperature & Creativity Controls

Behind the scenes, ChatGPT has knobs and dials, one of the most impactful being temperature.

Temperature controls how much randomness goes into choosing each word, which is why it is often described as the model’s creativity dial.

A low temperature (like 0.2) keeps things tight and focused.

It favors safe, predictable responses, great for consistent outputs.

A high temperature (like 0.9) introduces more randomness, enabling more creative or diverse replies. This is often used for storytelling, brainstorming, or idea generation.

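So when one user gets a concise answer and another gets a quirky, poetic take, it’s not necessarily inconsistency. It could be a difference in temperature settings, even if the prompt was the same, for example when one of them is using a tool built on the API.

Keep in mind that typing “Set temperature to 0.2” in the ChatGPT chat does not actually change this setting. Temperature is a parameter that developers set through the OpenAI API or the Playground. In the regular chat, the closest you can get is describing the style you want, such as “be concise and stick to the facts.”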
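For the curious, here is a small sketch of what temperature does mathematically, assuming the common approach of dividing the model's raw word scores by the temperature before converting them to probabilities. The function name and the three scores are hypothetical, chosen only to show the effect.

```python
import math

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next words.
scores = [2.0, 1.0, 0.5]

low = softmax_with_temperature(scores, 0.2)
high = softmax_with_temperature(scores, 0.9)

print([round(p, 3) for p in low])   # top word takes almost all the probability
print([round(p, 3) for p in high])  # probability is spread across the words
```

With these made-up scores, the top word gets roughly 99% of the probability at 0.2 and roughly 66% at 0.9, which is why lower values feel more predictable.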

4. Version Differences & System Updates

ChatGPT is not static software, it’s an evolving system. Different users may be accessing different versions of the model depending on the platform (e.g., GPT-3.5 vs. GPT-4), plan tier, or update cycle.

Just like apps or browsers, AI models are updated regularly. These changes can affect not just how the model answers, but how it thinks, introducing new information, improving tone control, or fine-tuning behavior.

Translation: a question you asked last month might get a noticeably different answer today, simply because the engine under the hood has been upgraded.

For example, when the first version of ChatGPT launched, you could get it to explain how to do a lot of dangerous “DIY projects” to put it lightly 🙂

Now, when you ask ChatGPT for a dangerous tutorial, it will just let you know that it cannot provide such information.

AI is reliable when it comes to keeping you safe… at least after these updates, lol.

5. Randomness and Probabilistic Output

Even with everything else controlled, there’s still one wildcard baked into ChatGPT’s core: randomness.

Language models don’t follow a single rigid path from prompt to answer.

They operate probabilistically, selecting each word based on the likelihood it fits, with some degree of built-in variation.

This ensures outputs feel natural and not robotic. But it also means identical prompts can yield slightly different phrasing or tone, even seconds apart.

It’s like shuffling a deck of cards from the same stack. You’ll always get cards from the same set, but the order changes, and that subtle randomness makes every interaction feel more human.

ChatGPT doesn’t think or feel, but it gives that impression partly thanks to this randomness.
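Here is a toy illustration of that probabilistic selection, using Python's standard `random.choices`. The candidate words and their weights are invented for the example; a real model works with a far larger vocabulary and recomputes the probabilities at every step.

```python
import random

# Hypothetical next-word candidates and probabilities (made up for illustration).
candidates = ["calm", "relaxed", "peaceful"]
weights = [0.5, 0.3, 0.2]

def sample_word(rng):
    """Pick one candidate at random, in proportion to its weight."""
    return rng.choices(candidates, weights=weights, k=1)[0]

rng = random.Random()  # unseeded, so results differ from run to run
draws = [sample_word(rng) for _ in range(5)]
print(draws)  # varies between runs, but always words from `candidates`
```

Run it a few times and the list changes, yet every word comes from the same small set, much like the shuffled deck described above.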

IV. When Is AI Reliable and When Does It Give the Same Answers?

Despite all this variability, ChatGPT isn’t a wild card every time. There are moments of striking consistency, by design.

Ask it factual, unambiguous questions, “What’s 5 + 5?”, “What is the capital of Japan?”, or “Who wrote 1984?”, and the answer is clear, correct, and nearly always identical across sessions and users.

This is because the model has extremely high confidence in well-established facts, where deviation offers no benefit.

When accuracy matters, predictability kicks in.
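A quick back-of-the-envelope calculation shows why confidence leads to consistency. If one option has probability p, the chance that two independent picks both land on it is p squared, so the overall chance of agreement is the sum of the squared probabilities. The numbers below are hypothetical and describe a single word choice, not a full multi-word answer.

```python
def agreement_probability(probs):
    """Chance that two independent draws pick the same option."""
    return sum(p * p for p in probs)

factual = [0.99, 0.005, 0.005]   # hypothetical: one answer dominates
open_ended = [0.4, 0.3, 0.3]     # hypothetical: several plausible answers

print(round(agreement_probability(factual), 3))     # 0.98
print(round(agreement_probability(open_ended), 3))  # 0.34
```

When one answer dominates, two users almost always see the same thing; when several answers are plausible, they agree only about a third of the time in this example.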

This makes ChatGPT a reliable tool for quick lookups, standardized definitions, and general knowledge.

It’s only when you veer into open-ended, creative, or subjective territory that the kaleidoscope of variability really comes alive.

So if you want precise answers, make sure your questions leave no room for interpretation or creativity.

V. Implications of This Variability

💡 Is AI Reliable for General Users?

Expect flexibility, not robotic repetition.

If you’re just brainstorming, or satisfying a burst of curiosity, variability is your ally.

It keeps the conversation fresh, nuanced, and human-like.

Just don’t expect ChatGPT to sound like a scripted FAQ every time. You’ll get personalized responses that reflect the way you ask.

Whether you’re researching a topic, writing a poem, or asking follow-up questions, the model is built to roll with your rhythm.

Remember, ChatGPT is just a tool. If it gives you the wrong answer in the wrong format, that doesn’t mean it is dumb. More often, the question was asked the wrong way.

If you’re looking for consistent results, some prompting frameworks will help you.

Here’s a great list of 10 ChatGPT Prompt Engineering Frameworks You Need To Know

But is it enough to make AI reliable? For most everyday tasks, yes!

📈 Is AI Reliable for Businesses & Professionals?

Control the chaos. Guide the output.

When consistency matters, like drafting marketing copy, support replies, or SEO content, unpredictability can become a liability.

That’s where prompt engineering and settings control come in, and they become even more important.

Make sure you give ChatGPT:

- A template for how to answer

- Clear instructions

- Examples of the answers you expect
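As a rough sketch, the three ingredients above can be combined into one reusable prompt. The function name `build_prompt`, the section labels, and the sample customer-support text are all hypothetical; adapt them to your own brand and use case.

```python
def build_prompt(instructions, template, examples, request):
    """Assemble one prompt from instructions, an answer template, and examples."""
    example_lines = "\n".join(f"- {ex}" for ex in examples)
    return (
        f"Instructions:\n{instructions}\n\n"
        f"Answer template:\n{template}\n\n"
        f"Examples of good answers:\n{example_lines}\n\n"
        f"Request:\n{request}"
    )

# Sample values invented for this example.
prompt = build_prompt(
    instructions="Reply in a friendly, concise tone. Maximum two sentences.",
    template="Greeting, then the answer, then one next step.",
    examples=["Hi Sam! Your order ships Monday. You can track it from your account page."],
    request="A customer asks when their refund will arrive.",
)
print(prompt)
```

Reusing the same structure for every request keeps the tone and format steady, even though the wording of each reply will still vary a little.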

In high-stakes use cases, treat ChatGPT like a junior content assistant: powerful, fast, but still needing editorial oversight.

Even with excellent prompts, human editing is non-negotiable, especially for tone, branding, and SEO alignment.

AI can give you the idea, maybe the draft… but you always have to work on it until it is ready to publish.

VI. Conclusion

ChatGPT doesn’t give the same answers to everyone, and it’s not supposed to.

But this is not a reason to question whether AI is reliable or not.

From dynamic context to creative variability, from system settings to session history, everything is designed to favor adaptability over uniformity.

Final thought?

Variability isn’t a bug, it’s a feature.

Guide it using frameworks like RTF (Role-Task-Format) or TAG (Task-Action-Goal) to use this variability to your advantage.

If you’re looking for advanced prompt frameworks, here’s a great list! It will help you make AI reliable for your work.

If you’d like to learn more about AI translation, here’s a great article: 5 Facts You Don’t Know About AI Translation in Marketing

Frequently Asked Questions

1. Does ChatGPT give the exact same answer to everyone who asks the same question?

Not necessarily. ChatGPT generates responses on the fly using patterns and probabilities. Even with identical prompts, the outputs can vary slightly due to its generative nature and settings.

2. Why do my results differ from someone else’s, even with the same question?

Several factors affect this: how the question is phrased, the temperature setting, the chat history, and even the version of ChatGPT you’re using. Small differences = big changes in response tone or detail.

3. Can I make AI more reliable and its responses more consistent?

Yes! Use clear, specific prompts, lower the temperature setting (if configurable), and maintain a consistent prompt structure. This reduces variation and boosts reliability.

4. Is this variability a flaw in the system?

Quite the opposite. Variability is an intentional design choice that allows ChatGPT to sound more natural and adaptive, especially useful for creative or nuanced tasks.

5. How can businesses ensure consistent tone and style in ChatGPT’s output?

Use prompt templates, define brand tone guidelines within your prompt, and always review outputs with a human editor. Think of ChatGPT as a drafting assistant, not a final publisher.